
Deep and Shallow Networks

When and Why Are Deep Networks Better than Shallow Ones? (Hrushikesh Mhaskar, Qianli Liao, Tomaso Poggio)

This paper gives a technically sound account of the advantages of Deep Learning (DL) technology over shallow neural models. It describes when DL is preferable and why. It is an in-depth and balanced technical analysis that supports neither those who consider shallow neural models "obsolete" nor those who consider DL a sort of "alchemy".
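To give a feel for the kind of result the paper derives, the rough sketch below compares the order-of-magnitude unit counts needed to approximate a function of n variables with smoothness m to accuracy eps: about eps^(-n/m) for a shallow network, against about (n-1)·eps^(-2/m) for a deep network whose binary-tree structure mirrors a compositional target function. The function names and the example values are illustrative assumptions, and constants are dropped, so only the growth trend is meaningful.

```python
def shallow_units(n: int, m: int, eps: float) -> float:
    """Order-of-magnitude unit count for a shallow network: eps^(-n/m)."""
    return eps ** (-n / m)


def deep_units(n: int, m: int, eps: float) -> float:
    """Order-of-magnitude unit count for a deep binary-tree network:
    (n - 1) * eps^(-2/m). Applies to compositional target functions only."""
    return (n - 1) * eps ** (-2 / m)


if __name__ == "__main__":
    m, eps = 2, 0.1  # assumed smoothness and target accuracy (illustrative)
    for n in (2, 4, 8, 16):
        s = shallow_units(n, m, eps)
        d = deep_units(n, m, eps)
        print(f"n={n:2d}  shallow ~ {s:.3g}  deep ~ {d:.3g}")
```

With these assumed values the shallow estimate grows exponentially with the number of inputs n, while the deep estimate grows only linearly, which is the trade-off the paper analyzes.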


OUR HISTORY AND OUR THOUGHT

We started doing research and development with neural networks in the 80s and, naturally, the Multilayer Perceptron with the Error Back-Propagation algorithm was the model we used most in the first years of activity. In those years there was no BIG DATA, and the best dataset available for pattern recognition tests on images was the MNIST set of handwritten digits. The most we could have as an accelerator for our 33MHz Intel 386DX was an ISA card with a DSP (Digital Signal Processor). Because the DSP was designed to calculate the FFT (Fast Fourier Transform), it was optimized for multiply-accumulate (MAC) operations, and this feature allowed us to speed up the operations on the single neuron.
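The link between MAC operations and the single neuron can be made concrete with a minimal sketch: the weighted sum computed by a perceptron neuron is a chain of multiply-accumulate steps, one per synapse, which is the operation a DSP is built to execute quickly. The function names and the sigmoid activation are illustrative assumptions, not a record of the original software.

```python
import math


def neuron_mac(inputs, weights, bias=0.0):
    """Weighted sum of a single neuron, written as explicit MAC steps."""
    acc = bias
    for x, w in zip(inputs, weights):
        acc += x * w  # one MAC: multiply, then accumulate
    return acc


def neuron_output(inputs, weights, bias=0.0):
    """Neuron activation: sigmoid of the accumulated sum (assumed activation)."""
    return 1.0 / (1.0 + math.exp(-neuron_mac(inputs, weights, bias)))


if __name__ == "__main__":
    x = [0.5, -1.0, 2.0]   # illustrative input pattern
    w = [0.2, 0.4, 0.1]    # illustrative synaptic weights
    print(neuron_mac(x, w))
    print(neuron_output(x, w))
```

A layer with many neurons repeats this loop once per neuron, so the time spent in MAC operations dominates both inference and back-propagation.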

Intel 386SX and an ISA board with DSP (Digital Signal Processor)

We developed methods that complemented or replaced the Error Back-Propagation algorithm, such as Genetic Algorithms and Simulated Annealing, which were triggered automatically to overcome local minima. We dreamed of feeding the image pixels directly to the network, planning to use at least ten hidden layers: the software to do all this was ready, but there were not enough images, and the memory and computing power of the computer we had were orders of magnitude smaller than required. Despite these limitations, with a Multilayer Perceptron with two hidden layers we created one of the first systems of Infrared Image Recognition for Land Mine Detection.

In a second phase we mainly used shallow neural networks with supervised and unsupervised learning algorithms: from Adaptive Resonance Theory (ART) to Self Organizing Map (SOM), from Support Vector Machine (SVM) to Extreme Learning Machine (ELM), from Radial Basis Function (RBF) to classifiers with Restricted Coulomb Energy (RCE).

Currently we think that Convolutional Neural Networks (CNN), which are nothing more than the evolution of Kunihiko Fukushima's Neocognitron (1979), are the most effective solution for image recognition where large labelled datasets are available. There are quality control contexts in the industrial environment in which the number of images available is not sufficient for a DL solution and, therefore, other methodologies must be used. DL technology has amply proven to be extremely effective but equally vulnerable, and therefore unreliable, in safety-critical applications (DARPA GARD Program).

An example of Deep Learning deception

There are contexts in which, for ethical or moral reasons, or because man and algorithm must cooperate, the inference of a neural network must be explainable (DARPA XAI Program). There are contexts in which current GPUs cannot operate (aerospace) because they are too vulnerable to cosmic radiation, and we need to develop algorithms that run efficiently on radiation-hardened processors, which typically operate at very low clock frequencies.

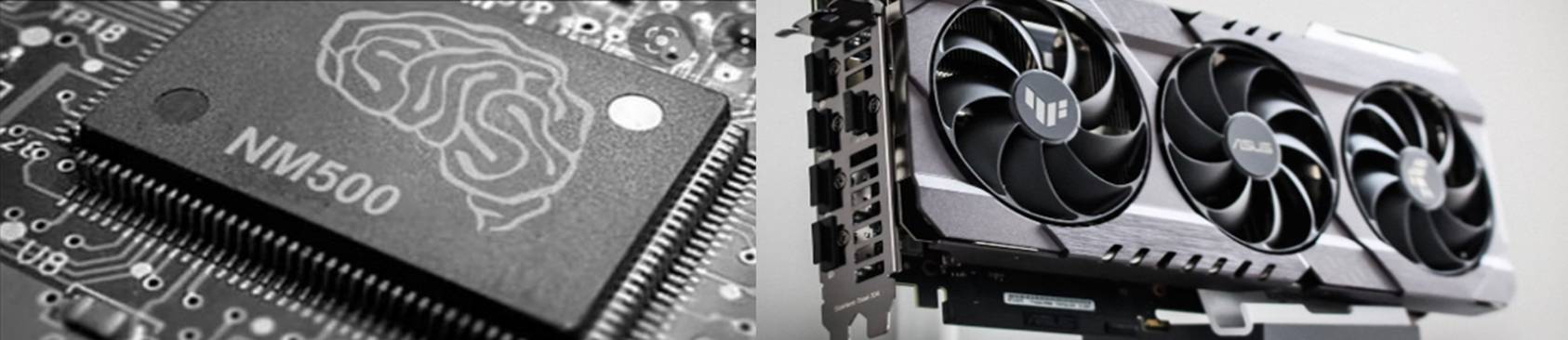

A GPU for Machine Learning

OUR CURRENT TECHNOLOGY

An application based entirely on Deep Learning is unable to learn new data in real time. However effective this solution is, it can be specialized only for a single task and cannot evolve in real time based on experience, as the human brain does. In effect, it is a computer program written by learning from data.

Our technologies derive from neuronal models based on three fundamental theories:

1) Hebb's rule (Donald O. Hebb)
2) ART (Adaptive Resonance Theory) (Stephen Grossberg / Gail Carpenter)
3) RCE (Restricted Coulomb Energy) (Leon Cooper)

We are inclined to use Deep Learning technology only in the field of image processing, and even there our technology uses DL only as a tool for the extraction of features (e.g. DESERT™). In

image processing it is decidedly more difficult to obtain explainable inference, even when using traditional feature extraction methods. The decision layer is always based on our SHARP™, LASER™ and ROCKET™ classification algorithms. In this way we combine the potential of Deep Learning technology with the continuous learning ability of our classifier models. This approach also makes the inference more robust, since it is no longer bound to the Error Back-propagation algorithm. Reading the text "When and Why

Are Deep Networks Better than Shallow Ones?" you can understand that shallow neural networks can solve the same problems as deep neural networks, since both are universal approximators. The price to pay for using shallow neural networks is that the number of parameters (synapses) grows almost exponentially as the complexity of the problem increases. The same does not happen for a Deep Neural Network when, as the paper shows, the function to be learned has a compositional structure that the network architecture can mirror. If we analyze the actual number of parameters that a shallow neural classifier would need to solve the most complex problems (currently solved by Deep Neural Networks), we realize that the problem is the lack of a SIMD (Single Instruction Multiple Data) machine that can process all those parameters simultaneously. Are we therefore facing a

technological problem? Yes, just as when there were no GPUs adequate for training deep neural networks. In fact, we cannot have SIMD processors with millions of Processing Elements (PE), and even less with billions of Processing Elements: such devices would be enough to close the gap in computational capacity between deep neural networks and shallow neural networks. But what technology has reached these numbers? RAM memory and FLASH

memory technologies. MYTHOS™ technology uses memory to exponentially accelerate the execution of prototype-based neural classifiers. MYTHOS™ technology is convenient when the neural classifier has to learn BIG DATA or HUGE DATA: the scan time of the prototypes increases only linearly for an exponential increase in the number of prototypes. MYTHOS™ technology, together with Neuromem® technology and NAND-FLASH memory, makes it possible to "broadcast" the input pattern to billions of prototypes in constant time.

Our technological response is application dependent. We are mainly oriented towards defence and aerospace applications, where we want to use MYTHOS™ technology with radiation-hardened CPUs. We design and build hardware solutions aimed at supporting DL in RAD-HARD devices for aerospace applications.
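The ideas in this section (an RCE-style prototype classifier, learning new examples one at a time, and the "broadcast" of one input pattern to all prototypes) can be illustrated with a minimal textbook-style sketch. It is not the SHARP™, LASER™, ROCKET™ or MYTHOS™ implementation, whose details are not described here; the names `RCEClassifier`, `max_radius` and `min_radius` and the choice of the Manhattan distance are assumptions made for illustration. In software the broadcast is a sequential scan whose cost grows with the number of prototypes, whereas parallel hardware compares the input with all prototypes at once.

```python
from dataclasses import dataclass


@dataclass
class Prototype:
    center: list
    radius: float
    label: str


def l1(a, b):
    """Manhattan distance between two equal-length vectors (assumed metric)."""
    return sum(abs(x - y) for x, y in zip(a, b))


class RCEClassifier:
    """Textbook-style RCE sketch: prototypes with shrinking influence fields."""

    def __init__(self, max_radius=10.0, min_radius=0.01):
        self.max_radius = max_radius
        self.min_radius = min_radius
        self.prototypes = []

    def firing(self, pattern):
        # "Broadcast": every prototype compares itself with the same input.
        # Parallel hardware does this at once; here it is a sequential scan.
        return [p for p in self.prototypes if l1(p.center, pattern) < p.radius]

    def classify(self, pattern):
        labels = {p.label for p in self.firing(pattern)}
        if not labels:
            return "unknown"
        return labels.pop() if len(labels) == 1 else "uncertain"

    def learn(self, pattern, label):
        """Learn one example incrementally, with no retraining pass."""
        recognized = False
        for p in self.firing(pattern):
            if p.label == label:
                recognized = True
            else:
                # Shrink a wrong-class field to the distance of the pattern
                # (bounded below by min_radius).
                p.radius = max(self.min_radius, l1(p.center, pattern))
        if not recognized:
            # New field reaches at most the nearest prototype of another class.
            rivals = [l1(p.center, pattern)
                      for p in self.prototypes if p.label != label]
            radius = max(self.min_radius, min([self.max_radius] + rivals))
            self.prototypes.append(Prototype(list(pattern), radius, label))


if __name__ == "__main__":
    clf = RCEClassifier(max_radius=4.0)
    clf.learn([0.0, 0.0], "A")
    clf.learn([3.0, 0.0], "B")
    print(clf.classify([1.5, 0.0]))   # both fields overlap here
    clf.learn([1.5, 0.0], "A")        # one new example resolves the overlap
    print(clf.classify([1.5, 0.0]))
```

The point of the sketch is the design choice: learning a new example only adds a prototype or shrinks existing fields, so the classifier can keep learning while in use, at the price of a prototype count (and scan time) that grows with the data unless the comparison is parallelized.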

General Vision Neuromem® with 5500 neurons

General Vision Neuromem® with 500 neurons

BAE SYSTEMS RAD750™

MIND™, with shallow (Neuromem®) NN accelerators and deep (TPU) NN accelerators, is a RAD-HARD AI device for aerospace applications


LUCA MARCHESE Aerospace & Defence Machine Learning Company VAT: IT0267070992 Email: luca.marchese@synaptics.org


Copyright © 2025 LUCA MARCHESE